Futures in Rust: An In-Depth Technical Analysis

2024-06-10

Introduction

In Rust, asynchronous programming leverages futures, which are essentially stackless coroutines. Unlike stackful coroutines that maintain their own stack, stackless coroutines rely on the compiler and runtime to manage state and execution. This approach allows for highly efficient and safe concurrency. This article provides an in-depth technical explanation of how futures work in Rust, focusing on the underlying mechanics and the role of the Rust compiler.

High-Level Introduction to Concurrency in Rust

Concurrency in Rust is centered around futures. A future represents a value or computation that will complete at some point in the future. Rust uses a poll-based model for asynchronous operations, where tasks are progressed by polling futures until they are ready.

What Rust Provides for Async Code

Rust’s standard library offers the fundamental components necessary for asynchronous programming:

- Future Trait: The core trait representing an asynchronous computation.

- Async/Await: Syntactic constructs for creating and working with asynchronous tasks.

- Waker: A handle to wake up a suspended task.

Rust does not include a built-in runtime for managing asynchronous tasks. Instead, external libraries like Tokio or async-std provide the necessary runtime environment.

Understanding the Need for a Runtime Library

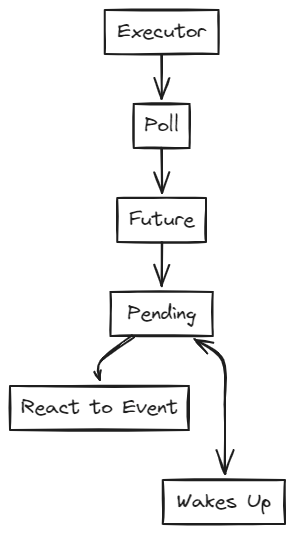

A runtime library in Rust manages the execution of futures. It involves several key components:

- Executor: Schedules and polls futures.

- Reactor: Monitors I/O events and notifies the executor.

- Waker: Wakes up tasks when they are ready to make progress.

Efficient management of these components is crucial for handling asynchronous tasks effectively.

Detailed Breakdown

What is a Future?

A future in Rust is defined by the Future trait, which looks like this:

pub trait Future {

type Output;

fn poll(self: Pin<&mut Self>, cx: &mut Context<'_>) -> Poll<Self::Output>;

}

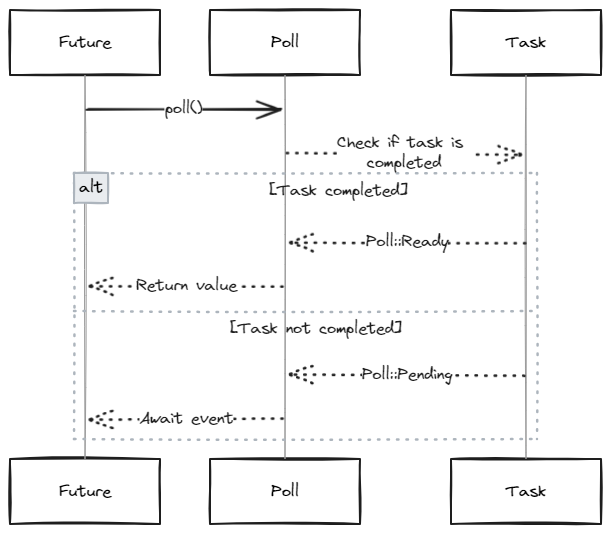

The poll method is the core of the future’s operation. It takes a Context, which provides a Waker, and returns a Poll enum, which can be either Poll::Pending or Poll::Ready(Output).

Polling a Future

When a future is polled, it performs some amount of work and returns one of two values:

- Poll::Pending: The future is not yet complete and needs to be polled again later.

- Poll::Ready: The future has completed, and its result is available.

Example of a basic future:

struct MyFuture;

impl Future for MyFuture {

type Output = i32;

fn poll(self: Pin<&mut Self>, cx: &mut Context<'_>) -> Poll<Self::Output> {

// Perform some computation

Poll::Ready(42)

}

}

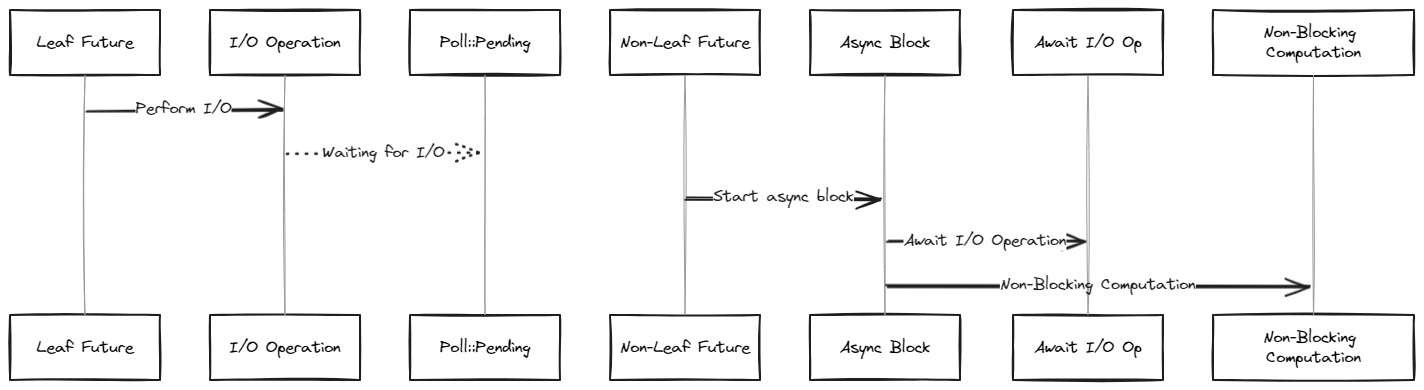

Leaf Futures

Leaf futures represent low-level operations, such as I/O tasks, that are inherently asynchronous. They are often provided by the runtime and are the fundamental building blocks of async programs.

Example of a leaf future with Tokio:

let mut stream = tokio::net::TcpStream::connect("127.0.0.1:3000");

These futures directly interact with I/O resources and are usually created by the runtime.

Non-Leaf Futures

Non-leaf futures are composed by combining other futures. They represent higher-level asynchronous tasks and are typically created by the user using the async keyword.

Example of a non-leaf future:

let non_leaf = async {

let mut stream = TcpStream::connect("127.0.0.1:3000").await.unwrap();

println!("connected!");

let result = stream.write(b"hello world\n").await;

println!("message sent!");

};

Runtimes

Rust’s approach to async programming requires an external runtime library to handle the scheduling and execution of futures. Popular runtimes include Tokio and async-std.

Tokio Example

Tokio provides a robust runtime for building async applications. Here’s an example of setting up a simple async task with Tokio:

use tokio::net::TcpStream;

#[tokio::main]

async fn main() {

let mut stream = TcpStream::connect("127.0.0.1:3000").await.unwrap();

stream.write_all(b"hello world\n").await.unwrap();

println!("message sent!");

}

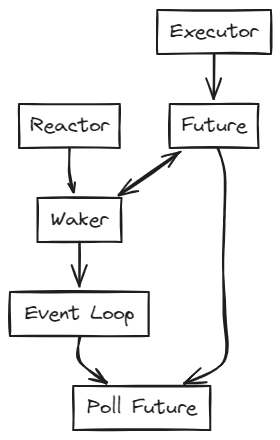

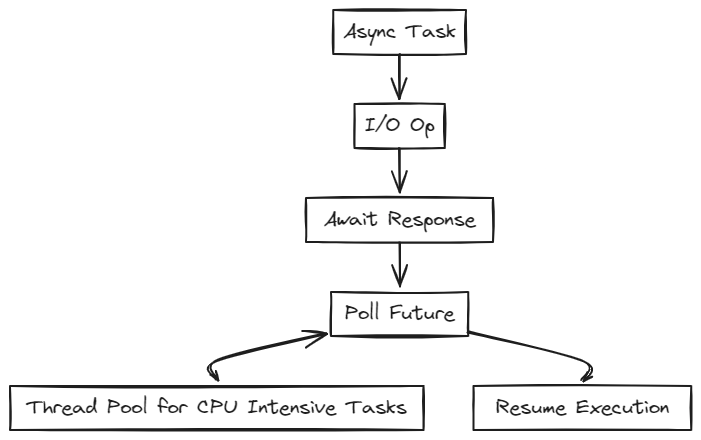

A Mental Model of an Async Runtime

An async runtime in Rust can be divided into three main parts:

- Reactor: Monitors and notifies about I/O events.

- Executor: Schedules and runs tasks.

- Future: Represents the tasks that can be paused and resumed.

Execution Flow

- Poll Phase: The executor polls a future.

- Wait Phase: The reactor registers the future for an event.

- Wake Phase: The event occurs, and the future is woken up.

This flow ensures efficient handling of asynchronous tasks without blocking the executor.

I/O vs CPU-Intensive Tasks

Non-leaf futures often involve both I/O and CPU-intensive tasks. Handling CPU-intensive tasks in an async environment requires special consideration to avoid blocking the executor thread.

Handling CPU-Intensive Tasks

- Offloading to a Thread Pool: Using

spawn_blockingto offload CPU-intensive tasks to a separate thread pool. - Supervisor Threads: Some runtimes implement supervisor threads to monitor and redistribute heavy tasks.

Example of offloading a CPU-intensive task:

use tokio::task;

let report = task::spawn_blocking(|| {

analyzer::analyze_data(response)

}).await.unwrap();

Under the Hood: How Futures Work

To understand how futures work under the hood, it’s essential to look at how the Rust compiler and runtime libraries interact to manage asynchronous tasks.

The Compiler’s Role

When you write asynchronous code using async/await, the Rust compiler transforms this code into a state machine. Each await point in your code is a potential suspension point, where the execution can be paused and resumed later. The compiler generates a series of states and the transitions between them.

State Machines

Consider the following async function:

async fn example() {

let a = some_async_function().await;

let b = another_async_function(a).await;

println!("Result: {}", b);

}

The Rust compiler translates this into something akin to:

enum ExampleState {

Start,

AwaitingSomeFunction(Pin<Box<dyn Future<Output = SomeType>>>),

AwaitingAnotherFunction(Pin<Box<dyn Future<Output = AnotherType>>>),

Done,

}

struct Example {

state: ExampleState,

output: Option<AnotherType>,

}

impl Future for Example {

type Output = AnotherType;

fn poll(self: Pin<&mut Self>, cx: &mut Context<'_>) -> Poll<Self::Output> {

match self.state {

ExampleState::Start => {

let future = some_async_function();

self.state = ExampleState::AwaitingSomeFunction(Box::pin(future));

self.poll(cx)

}

ExampleState::AwaitingSomeFunction(ref mut future) => {

match future.as_mut().poll(cx) {

Poll::Ready(value) => {

let future = another_async_function(value);

self.state = ExampleState::AwaitingAnotherFunction(Box::pin(future));

self.poll(cx)

}

Poll::Pending => Poll::Pending,

}

}

ExampleState::AwaitingAnotherFunction(ref mut future) => {

match future.as_mut().poll(cx) {

Poll::Ready(value) => {

self.output = Some(value);

self.state = ExampleState::Done;

Poll::Ready(self.output.take().unwrap())

}

Poll::Pending => Poll::Pending,

}

}

ExampleState::Done => Poll::Ready(self.output.take().unwrap()),

}

}

}

This state machine allows the async function to pause and resume execution at each await point, enabling non-blocking concurrency.

The Role of Wakers

Wakers are used to notify the executor that a future is ready to make progress. The Waker type is part of the Context passed to the poll method. When a future needs to be polled again, it calls the wake method on the waker, which schedules the future to be polled.

Creating a Waker

Creating a waker involves implementing the ArcWake trait, which defines how the waker should behave when it’s woken up:

use std::sync::{Arc, Mutex};

use std::task::{Waker, Context, Poll};

use futures::task::{waker_ref, ArcWake};

struct MyWaker {

// Shared state that the waker will modify

}

impl ArcWake for MyWaker {

fn wake_by_ref(arc_self: &Arc<Self>) {

// Implement wake-up logic

}

}

// Example usage

let my_waker = Arc::new(MyWaker { /* state */ });

let waker = waker_ref(&my_waker).clone();

let cx = &mut Context::from_waker(&waker);

// Pass `cx` to the poll method of a future

Executors

Executors are responsible for polling futures. They maintain a queue of tasks that need to be polled and manage the execution of these tasks.

Implementing an Executor

A basic executor might look like this:

use futures::task::{waker_ref, ArcWake};

use std::future::Future;

use std::sync::{Arc, Mutex};

use std::task::{Context, Poll};

use std::thread;

use std::pin::Pin;

struct Task {

future: Mutex<Pin<Box<dyn Future<Output = ()> + Send>>>,

}

impl ArcWake for Task {

fn wake_by_ref(arc_self: &Arc<Self>) {

// Wake up logic, e.g., enqueue the task to be polled again

}

}

struct Executor {

tasks: Vec<Arc<Task>>,

}

impl Executor {

fn new() -> Self {

Executor { tasks: vec![] }

}

fn spawn(&mut self, future: impl Future<Output = ()> + Send + 'static) {

let task = Arc::new(Task {

future: Mutex::new(Box::pin(future)),

});

self.tasks.push(task);

}

fn run(&self) {

while !self.tasks.is_empty() {

for task in &self.tasks {

let waker = waker_ref(task).clone();

let mut cx = Context::from_waker(&waker);

let mut future = task.future.lock().unwrap();

if let Poll::Pending = future.as_mut().poll(&mut cx) {

// Future is still pending, it will be polled again later

}

}

}

}

}

// Usage

let mut executor = Executor::new();

executor.spawn(async {

println!("Hello, world!");

});

executor.run();

This executor creates and runs tasks, polling their futures until they are ready.

I/O vs CPU-Intensive Tasks

Non-leaf futures often involve both I/O and CPU-intensive tasks. Handling CPU-intensive tasks in an async environment requires special consideration to avoid blocking the executor thread.

Handling CPU-Intensive Tasks

- Offloading to a Thread Pool: Using

spawn_blockingto offload CPU-intensive tasks to a separate thread pool. - Supervisor Threads: Some runtimes implement supervisor threads to monitor and redistribute heavy tasks.

Example of offloading a CPU-intensive task:

use tokio::task;

let report = task::spawn_blocking(|| {

analyzer::analyze_data(response)

}).await.unwrap();

Advantages and Disadvantages of Rust’s Async Model

Advantages

- Performance: Rust’s async model is highly efficient, leveraging zero-cost abstractions.

- Flexibility: Users can choose or even implement custom runtimes tailored to specific needs.

- Safety: Rust’s ownership and type system ensure memory safety and prevent data races.

Disadvantages

- Complexity: Understanding and correctly implementing futures and async tasks can be challenging.

- Manual Management: Users must manage runtimes and tasks explicitly, which can introduce complexity.

Summary

This comprehensive overview introduced Rust’s futures, exploring their design, implementation, and usage. We discussed the poll-based approach, the distinction between leaf and non-leaf futures, the necessity of runtime libraries, and handling CPU-intensive tasks. We also delved into the underlying mechanics of how the Rust compiler transforms async code into state machines and how executors and wakers work together to manage asynchronous tasks.

Understanding these concepts is crucial for efficient asynchronous programming in Rust. With this foundation, you are now prepared to explore Rust’s async capabilities further and build high-performance concurrent applications.