A Comprehensive Guide to Load Balancer Algorithms

2024-06-24

Table of Contents

- Introduction

- Fundamentals of Load Balancing

- Load Balancing Algorithms

- Comparative Analysis

- Choosing the Right Algorithm

- Advanced Considerations

- Conclusion

Introduction

Load balancing is a critical component of modern distributed systems and web architectures. By spreading requests across a pool of servers, it aims to use resources efficiently, maximize throughput, minimize response time, and keep any single server from being overloaded. This guide examines common load balancing algorithms, compares them, and offers guidance on choosing the most appropriate algorithm for different scenarios.

Fundamentals of Load Balancing

Load balancing aims to achieve several key objectives:

- Even Distribution: Spread workload across multiple servers.

- High Availability: Ensure service continuity even if some servers fail.

- Scalability: Easily add or remove servers to handle changing traffic demands.

- Flexibility: Adapt to various application needs and infrastructure setups.

Load Balancing Algorithms

1. Round Robin

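Mechanism: Requests are assigned to servers in a fixed cyclic order. The first request goes to the first server, the next to the second, and so on, wrapping back to the first server after the last.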

Pros:

- Simple to implement and understand

- Works well for servers with similar capabilities

Cons:

- Doesn’t consider server load or response time

- May lead to uneven load when requests vary in processing time, even though each server receives the same number of requests

Use Case: Environments with servers of similar capacity and where requests generally require similar processing time.
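A minimal Python sketch of the cyclic order described above, assuming servers are identified by plain strings (the class name `RoundRobin` and the server names are illustrative, not from any particular load balancer):

```python
from typing import List, Sequence


class RoundRobin:
    """Hands out servers in a fixed cyclic order."""

    def __init__(self, servers: Sequence[str]) -> None:
        if not servers:
            raise ValueError("server pool must not be empty")
        self._servers: List[str] = list(servers)
        self._next = 0  # index of the server that receives the next request

    def pick(self) -> str:
        server = self._servers[self._next]
        # Advance, wrapping around to the first server after the last one.
        self._next = (self._next + 1) % len(self._servers)
        return server


lb = RoundRobin(["app-1", "app-2", "app-3"])
print([lb.pick() for _ in range(5)])  # ['app-1', 'app-2', 'app-3', 'app-1', 'app-2']
```

The counter is shared mutable state, so a multi-threaded balancer would need to guard `pick` with a lock or use an atomic counter.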

2. Weighted Round Robin

Mechanism: Similar to Round Robin, but servers are assigned weights determining the proportion of requests they receive.

Pros:

- Allows for fine-tuning based on server capabilities

- Useful for heterogeneous environments

Cons:

- Requires manual configuration of weights

- Doesn’t adapt automatically to changing server conditions

Use Case: Environments with known, stable differences in server capacities.
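One common way to implement weighted round robin is the "smooth" variant that nginx uses for weighted upstreams: instead of sending a heavy server several requests in a row, it interleaves picks. The sketch below assumes integer weights and string server names; it is an illustration of that variant, not a reproduction of nginx's code.

```python
from typing import Dict


class SmoothWeightedRoundRobin:
    """Weighted round robin that interleaves picks instead of sending bursts."""

    def __init__(self, weights: Dict[str, int]) -> None:
        if not weights or any(w <= 0 for w in weights.values()):
            raise ValueError("need at least one server, and every weight must be positive")
        self._weights = dict(weights)
        self._current = {server: 0 for server in weights}  # running score per server
        self._total = sum(weights.values())

    def pick(self) -> str:
        # Every server earns its weight; the highest running score wins...
        for server, weight in self._weights.items():
            self._current[server] += weight
        best = max(self._current, key=self._current.__getitem__)  # ties: first in insertion order
        # ...and pays back the total weight, which pushes it below the others
        # and spreads its turns out over the cycle.
        self._current[best] -= self._total
        return best


lb = SmoothWeightedRoundRobin({"big": 5, "small-1": 1, "small-2": 1})
print([lb.pick() for _ in range(7)])
# ['big', 'big', 'small-1', 'big', 'small-2', 'big', 'big']
```

Over every cycle of 7 picks, "big" receives 5 requests and each small server receives 1, matching the 5:1:1 weights.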

3. Least Connections

Mechanism: Directs traffic to the server with the fewest active connections.

Pros:

- Adapts to real-time server load

- Prevents overloading of any single server

Cons:

- Can be misleading for long-lived connections, which count against a server even while they sit mostly idle

- Assumes connection count accurately represents load

Use Case: Applications with variable request processing times or where server load can fluctuate rapidly.
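A minimal sketch of Least Connections, assuming the balancer can call a hypothetical `release` hook when each connection closes (without that hook the counts drift and the algorithm degrades):

```python
from typing import Dict, Iterable


class LeastConnections:
    """Tracks in-flight connections and routes each new one to the least busy server."""

    def __init__(self, servers: Iterable[str]) -> None:
        self._active: Dict[str, int] = {server: 0 for server in servers}
        if not self._active:
            raise ValueError("server pool must not be empty")

    def acquire(self) -> str:
        # Fewest active connections wins; ties go to the first server in pool order.
        server = min(self._active, key=self._active.__getitem__)
        self._active[server] += 1
        return server

    def release(self, server: str) -> None:
        # Must be called when the connection closes, otherwise counts only grow.
        if self._active[server] <= 0:
            raise ValueError(f"{server} has no active connections to release")
        self._active[server] -= 1


lb = LeastConnections(["app-1", "app-2"])
first = lb.acquire()   # 'app-1'
second = lb.acquire()  # 'app-2'
lb.release(first)      # app-1's connection closes
print(lb.acquire())    # 'app-1' (0 active vs. 1)
```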

4. Weighted Least Connections

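Mechanism: Combines Least Connections with server weighting. Each request goes to the server with the lowest ratio of active connections to weight, so a server with twice the weight is expected to carry roughly twice as many connections.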

Pros:

- Considers both real-time load and server capacity

- Effective in heterogeneous environments

Cons:

- More complex to implement and manage

- Requires careful weight configuration

Use Case: Heterogeneous environments with varying server capacities and fluctuating workloads.
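The connections-to-weight ratio can be sketched as follows. Assumptions: integer weights, ties broken in favor of the higher-weight server (a choice made for this example), and `fractions.Fraction` used so ratio comparisons are exact.

```python
from fractions import Fraction
from typing import Dict, Tuple


class WeightedLeastConnections:
    """Routes to the server with the fewest active connections per unit of weight."""

    def __init__(self, weights: Dict[str, int]) -> None:
        if not weights or any(w <= 0 for w in weights.values()):
            raise ValueError("need at least one server, and every weight must be positive")
        self._weights = dict(weights)
        self._active = {server: 0 for server in weights}

    def _load(self, server: str) -> Tuple[Fraction, int]:
        # Active connections divided by weight; on a tie, prefer the heavier server.
        return (Fraction(self._active[server], self._weights[server]), -self._weights[server])

    def acquire(self) -> str:
        server = min(self._weights, key=self._load)
        self._active[server] += 1
        return server

    def release(self, server: str) -> None:
        if self._active[server] <= 0:
            raise ValueError(f"{server} has no active connections to release")
        self._active[server] -= 1


lb = WeightedLeastConnections({"big": 3, "small": 1})
picks = [lb.acquire() for _ in range(8)]
print(picks.count("big"), picks.count("small"))  # 6 2
```

With no connections closing, the 3:1 weights produce a 6:2 split after eight connections; once connections are released, the split follows real-time load instead.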

5. Least Response Time

Mechanism: Routes each request to the server with the best combination of low average response time and few active connections.

Pros:

- Optimizes for user experience by minimizing latency

- Adapts to both server load and performance

Cons:

- Requires continuous monitoring of response times

- Can be sensitive to temporary fluctuations

Use Case: Applications where minimizing latency is critical, such as real-time applications or APIs.
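A sketch of one way to combine the two signals. Assumptions: latency is smoothed with an exponentially weighted moving average (EWMA, with a hypothetical `alpha=0.3`), and the score `latency * (active + 1)` is a formula chosen for illustration, not the formula of any specific product. Servers with no samples yet score zero, so they are probed early.

```python
from typing import Dict, Iterable, Optional, Tuple


class LeastResponseTime:
    """Scores servers by smoothed latency scaled by how many requests they already hold."""

    def __init__(self, servers: Iterable[str], alpha: float = 0.3) -> None:
        self._alpha = alpha  # weight of the newest sample in the EWMA (hypothetical default)
        self._ewma_ms: Dict[str, Optional[float]] = {}
        self._active: Dict[str, int] = {}
        for server in servers:
            self._ewma_ms[server] = None  # None = no sample yet
            self._active[server] = 0

    def _score(self, server: str) -> Tuple[float, int]:
        latency = self._ewma_ms[server] or 0.0
        # Scale latency by queue depth; the active count breaks ties (e.g. unsampled servers).
        return (latency * (self._active[server] + 1), self._active[server])

    def pick(self) -> str:
        server = min(self._ewma_ms, key=self._score)
        self._active[server] += 1
        return server

    def record(self, server: str, elapsed_ms: float) -> None:
        """Call once the response completes, with its measured latency."""
        self._active[server] -= 1
        prev = self._ewma_ms[server]
        if prev is None:
            self._ewma_ms[server] = elapsed_ms
        else:
            self._ewma_ms[server] = self._alpha * elapsed_ms + (1 - self._alpha) * prev


lb = LeastResponseTime(["app-1", "app-2"])
s = lb.pick(); lb.record(s, 120.0)  # app-1 answers in 120 ms
s = lb.pick(); lb.record(s, 30.0)   # unsampled app-2 is probed next, answers in 30 ms
print(lb.pick())                    # app-2
```

The smoothing factor controls the sensitivity noted in the cons above: a larger `alpha` reacts faster but also chases temporary fluctuations.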

6. IP Hash

Mechanism: Uses a hash of the client’s IP address to determine which server receives the request.

Pros:

- Provides session persistence without shared storage, as long as the client's IP address and the server pool stay the same

- Consistent mapping for the same client

Cons:

- Distribution quality depends on client IP diversity

- Doesn’t adapt to server load or capacity changes

Use Case: Applications requiring session persistence without shared session storage.
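A minimal IP Hash sketch, assuming a simple hash-modulo-pool-size mapping (the function name `ip_hash` is illustrative). It uses `hashlib` rather than Python's built-in `hash()`, because string hashing is randomized per process and separate balancer processes would otherwise disagree on the mapping.

```python
import hashlib
import ipaddress
from typing import Sequence


def ip_hash(client_ip: str, servers: Sequence[str]) -> str:
    """Map a client IP to a server; the same IP always lands on the same server."""
    if not servers:
        raise ValueError("server pool must not be empty")
    # Parse first so equivalent spellings of an address (e.g. IPv6 with or
    # without zero compression) produce the same bytes and the same hash.
    packed = ipaddress.ip_address(client_ip).packed
    digest = hashlib.sha256(packed).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


pool = ["app-1", "app-2", "app-3"]
print(ip_hash("203.0.113.7", pool) == ip_hash("203.0.113.7", pool))  # True
```

Because the index depends on `len(servers)`, adding or removing a server remaps most clients, which is the capacity-change weakness listed in the cons.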

7. URL Hash

Mechanism: Similar to IP Hash, but uses the requested URL to determine server selection.

Pros:

- Useful for CDN-like setups

- Can improve cache hit ratios

Cons:

- Distribution depends on URL patterns

- May lead to uneven distribution for popular content

Use Case: Content Delivery Networks or caching-heavy applications.
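A URL Hash sketch that swaps in rendezvous (highest-random-weight) hashing instead of hash-modulo, a technique not described above. With rendezvous hashing, removing a cache only remaps the URLs that were stored on it, which helps preserve cache hit ratios. Keying on path plus query string (ignoring scheme and host) is an assumption made for this example.

```python
import hashlib
from typing import Sequence
from urllib.parse import urlsplit


def _score(server: str, key: str) -> int:
    # Deterministic pseudo-random score for each (server, key) pair.
    digest = hashlib.sha256(f"{server}|{key}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def url_hash(url: str, servers: Sequence[str]) -> str:
    """Pick the server with the highest score for this URL (rendezvous hashing)."""
    if not servers:
        raise ValueError("server pool must not be empty")
    parts = urlsplit(url)
    # Assumption: key on path + query only, so absolute and relative forms match.
    key = parts.path + ("?" + parts.query if parts.query else "")
    return max(servers, key=lambda server: _score(server, key))


caches = ["cache-1", "cache-2", "cache-3"]
print(url_hash("https://cdn.example.com/img/logo.png", caches)
      == url_hash("/img/logo.png", caches))  # True
```

Hot URLs still map to a single server, so this does not address the popular-content imbalance listed in the cons.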

8. Least Bandwidth

Mechanism: Directs traffic to the server currently serving the least amount of traffic (measured in Mbps).

Pros:

- Optimizes network resource utilization

- Useful for bandwidth-intensive applications

Cons:

- Requires real-time bandwidth monitoring

- May not correlate with actual server load

Use Case: Applications with significant variations in bandwidth requirements, such as media streaming or file downloads.
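A sketch of Least Bandwidth using a sliding time window. Assumptions: the proxy reports bytes sent per server through a hypothetical `record` call, and each report is counted at the moment it is recorded rather than spread across the transfer's duration. The optional `now` parameter exists only to make the example deterministic.

```python
import time
from collections import deque
from typing import Deque, Dict, Iterable, Optional, Tuple


class LeastBandwidth:
    """Routes to the server with the lowest recent throughput, in Mbps."""

    def __init__(self, servers: Iterable[str], window_s: float = 10.0) -> None:
        if window_s <= 0:
            raise ValueError("window_s must be positive")
        self._window_s = window_s
        # (timestamp, bytes) samples per server, oldest first.
        self._samples: Dict[str, Deque[Tuple[float, int]]] = {s: deque() for s in servers}
        if not self._samples:
            raise ValueError("server pool must not be empty")

    def record(self, server: str, nbytes: int, now: Optional[float] = None) -> None:
        now = time.monotonic() if now is None else now
        self._samples[server].append((now, nbytes))

    def mbps(self, server: str, now: Optional[float] = None) -> float:
        now = time.monotonic() if now is None else now
        samples = self._samples[server]
        # Drop samples that have aged out of the window.
        while samples and samples[0][0] <= now - self._window_s:
            samples.popleft()
        total_bytes = sum(nbytes for _, nbytes in samples)
        return total_bytes * 8 / self._window_s / 1_000_000  # bits/s -> Mbps

    def pick(self, now: Optional[float] = None) -> str:
        now = time.monotonic() if now is None else now
        return min(self._samples, key=lambda server: self.mbps(server, now))


lb = LeastBandwidth(["media-1", "media-2"], window_s=10.0)
lb.record("media-1", 50_000_000, now=100.0)  # 50 MB sent in the window
lb.record("media-2", 5_000_000, now=100.0)
print(lb.mbps("media-1", now=101.0))  # 40.0
print(lb.pick(now=101.0))             # media-2
```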

9. Resource-Based (Adaptive)

Mechanism: Distributes load based on real-time analysis of server resource utilization (CPU, memory, etc.).

Pros:

- Highly efficient in distributing load based on actual server capacity

- Adapts to changing server conditions in real-time

Cons:

- Requires sophisticated monitoring and analysis capabilities

- Higher implementation and operational complexity

Use Case: Complex, resource-intensive applications in dynamic environments.
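A minimal resource-based sketch. Assumptions: each server runs a hypothetical monitoring agent that reports CPU and memory utilization as fractions between 0.0 and 1.0; the 70/30 CPU/memory blend and the 0.9 saturation cutoff are illustrative values, not recommendations.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Metrics:
    """Utilization reported by a hypothetical per-server agent, as fractions 0.0-1.0."""
    cpu: float
    memory: float


def pick_by_resources(
    metrics: Dict[str, Metrics],
    cpu_weight: float = 0.7,  # hypothetical weighting of CPU vs. memory
    max_util: float = 0.9,    # hypothetical cutoff: skip servers at or above this
) -> Optional[str]:
    """Return the server with the most headroom, or None if every server is saturated."""

    def score(m: Metrics) -> float:
        # Blended utilization: lower means more spare capacity.
        return cpu_weight * m.cpu + (1 - cpu_weight) * m.memory

    eligible = {
        server: m for server, m in metrics.items()
        if m.cpu < max_util and m.memory < max_util
    }
    if not eligible:
        return None
    return min(eligible, key=lambda server: score(eligible[server]))


snapshot = {
    "app-1": Metrics(cpu=0.80, memory=0.20),
    "app-2": Metrics(cpu=0.40, memory=0.60),
    "app-3": Metrics(cpu=0.95, memory=0.10),  # over the cutoff, skipped
}
print(pick_by_resources(snapshot))  # app-2
```

Metrics arrive periodically, so always choosing the single best server from a stale snapshot can send a burst of requests to one machine between refreshes; production systems typically smooth or randomize this choice.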

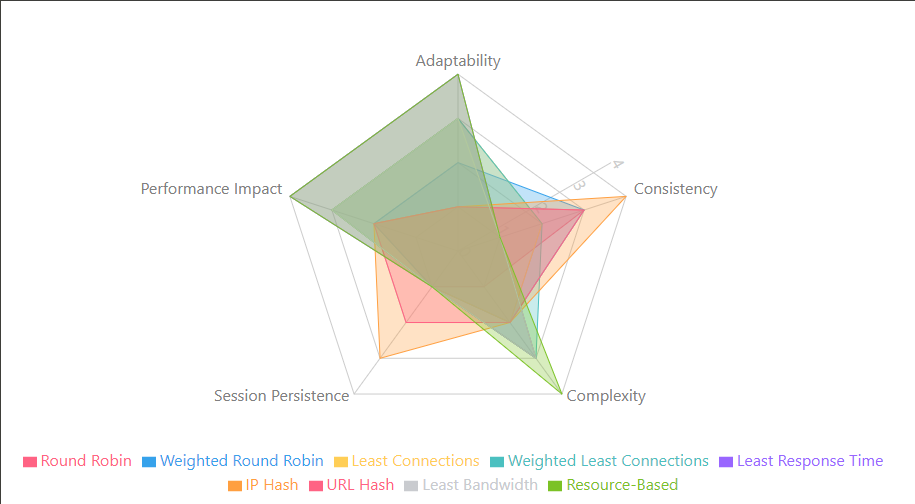

Comparative Analysis

| Algorithm | Adaptability | Consistency | Complexity | Session Persistence | Performance Impact |
|---|---|---|---|---|---|
| Round Robin | Low | High | Low | Low | Medium |
| Weighted Round Robin | Medium | High | Medium | Low | Medium |
| Least Connections | High | Medium | Medium | Low | High |
| Weighted Least Connections | High | Medium | High | Low | High |
| Least Response Time | Very High | Low | High | Low | Very High |
| IP Hash | Low | Very High | Medium | High | Medium |
| URL Hash | Low | High | Medium | Medium | Medium |
| Least Bandwidth | High | Low | High | Low | High |
| Resource-Based | Very High | Low | Very High | Low | Very High |

Choosing the Right Algorithm

Selecting the appropriate load balancing algorithm depends on various factors:

- Application Characteristics: Stateful vs. stateless, processing intensity, etc.

- Infrastructure: Homogeneous vs. heterogeneous server environment.

- Traffic Patterns: Predictable vs. bursty, uniform vs. varied processing requirements.

- Scalability Requirements: Frequency of adding/removing servers.

- Monitoring Capabilities: Ability to track server health, performance, and resource utilization.

Advanced Considerations

- Hybrid Approaches: Combining multiple algorithms for optimized performance.

- Health Checks: Implementing robust server health monitoring.

- Geographic Distribution: Considering server and client locations for global deployments.

- SSL Offloading: Handling SSL/TLS termination at the load balancer.

- Content-Aware Load Balancing: Routing based on request content or type.
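Health checks and hybrid approaches often work together: a health tracker decides which servers are eligible, and any of the algorithms above chooses among them. The sketch below assumes some external prober calls `report` with each check result. It marks a server down or up only after several consecutive agreeing results, similar in spirit to HAProxy's `rise`/`fall` server parameters; the class names and default thresholds are hypothetical.

```python
from typing import Dict, Iterable, List


class HealthTracker:
    """Marks servers up/down only after several consecutive check results agree."""

    def __init__(self, servers: Iterable[str], fall: int = 3, rise: int = 2) -> None:
        self._fall = fall  # consecutive failures before marking a server down
        self._rise = rise  # consecutive successes before marking it up again
        self._up: Dict[str, bool] = {s: True for s in servers}
        self._streak: Dict[str, int] = {s: 0 for s in servers}  # results contradicting state

    def report(self, server: str, ok: bool) -> None:
        if ok == self._up[server]:
            self._streak[server] = 0  # result agrees with current state
            return
        self._streak[server] += 1
        if self._streak[server] >= (self._rise if ok else self._fall):
            self._up[server] = ok
            self._streak[server] = 0

    def healthy(self) -> List[str]:
        return [s for s, up in self._up.items() if up]


class HealthyRoundRobin:
    """Hybrid: round robin, but only over servers the tracker currently reports as up."""

    def __init__(self, tracker: HealthTracker) -> None:
        self._tracker = tracker
        self._counter = 0

    def pick(self) -> str:
        pool = self._tracker.healthy()
        if not pool:
            raise RuntimeError("no healthy servers")
        server = pool[self._counter % len(pool)]
        self._counter += 1
        return server


tracker = HealthTracker(["app-1", "app-2"], fall=3, rise=2)
for _ in range(3):
    tracker.report("app-2", ok=False)  # third consecutive failure marks it down
lb = HealthyRoundRobin(tracker)
print([lb.pick() for _ in range(3)])  # ['app-1', 'app-1', 'app-1']
```

Requiring consecutive results keeps a single dropped probe from ejecting a server, at the cost of a few seconds' delay before a real failure is acted on.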

Conclusion

Load balancing is a crucial aspect of maintaining efficient, scalable, and reliable distributed systems. While each algorithm has its strengths and ideal use cases, the best choice often depends on the specific requirements of your application and infrastructure.

Key takeaways:

- Simple algorithms like Round Robin can be effective for many scenarios.

- Dynamic algorithms (e.g., Least Connections, Resource-Based) offer better adaptability to changing conditions.

- Consider session persistence needs, especially for stateful applications.

- Regular monitoring and adjustment of your load balancing strategy are crucial as your system evolves.

Remember, many modern load balancers allow for dynamic algorithm selection or hybrid approaches, enabling further optimization based on real-time conditions. As you implement and refine your load balancing strategy, test it under realistic traffic patterns and failure scenarios to confirm that it meets your performance and reliability goals.