Reactor-Executor Pattern: A Deep Dive

2024-06-11

The Reactor-Executor pattern is a powerful architectural approach for building highly scalable and responsive concurrent applications. It separates the concerns of event demultiplexing and event handling, enhancing both scalability and manageability. This article explores the pattern in depth, discussing its components, benefits, and implementation details. We’ll provide examples in Rust to illustrate its practical application.

Understanding the Reactor-Executor Pattern

Reactor Pattern

The Reactor pattern handles service requests delivered concurrently to an application by one or more clients. It demultiplexes and dispatches these service requests, allowing the application to handle multiple events efficiently.

Key Components of the Reactor Pattern

- Handles: Resources on which events occur, such as network sockets or file descriptors.

- Synchronous Event Demultiplexer: An event loop that waits for events on a set of handles and returns those on which events occurred.

- Event Handlers: Callback methods that process the events occurring on the handles.

- Reactor: Manages the event handlers and the synchronous event demultiplexer.

Executor Pattern

The Executor pattern decouples task submission from execution. It provides a way to manage and execute asynchronous tasks without directly managing threads.

Key Components of the Executor Pattern

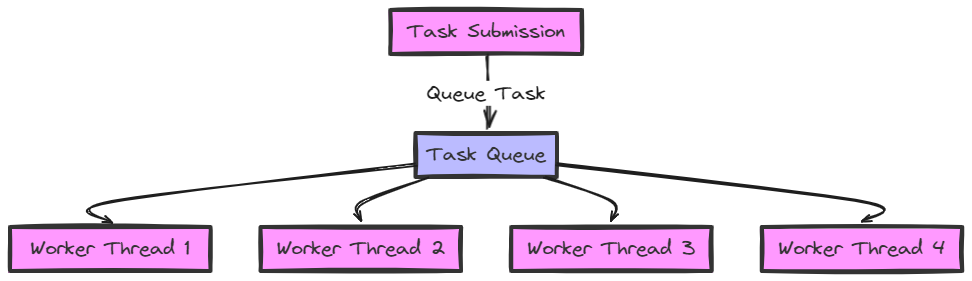

- Tasks: Units of work that need to be executed.

- Executor: Manages the execution of asynchronous tasks, typically using a thread pool.

- Thread Pool: A collection of worker threads that execute asynchronous tasks.

Combining Reactor and Executor Patterns

The Reactor-Executor pattern combines these two patterns to handle events efficiently and manage task execution in a scalable manner.

Key Components of the Reactor-Executor Pattern

- Reactor: Manages event demultiplexing and dispatching.

- Event Handlers: Define actions to be performed when an event occurs.

- Executor: Manages a pool of threads and executes tasks submitted by event handlers.

- Thread Pool: Provides threads for executing tasks managed by the executor.

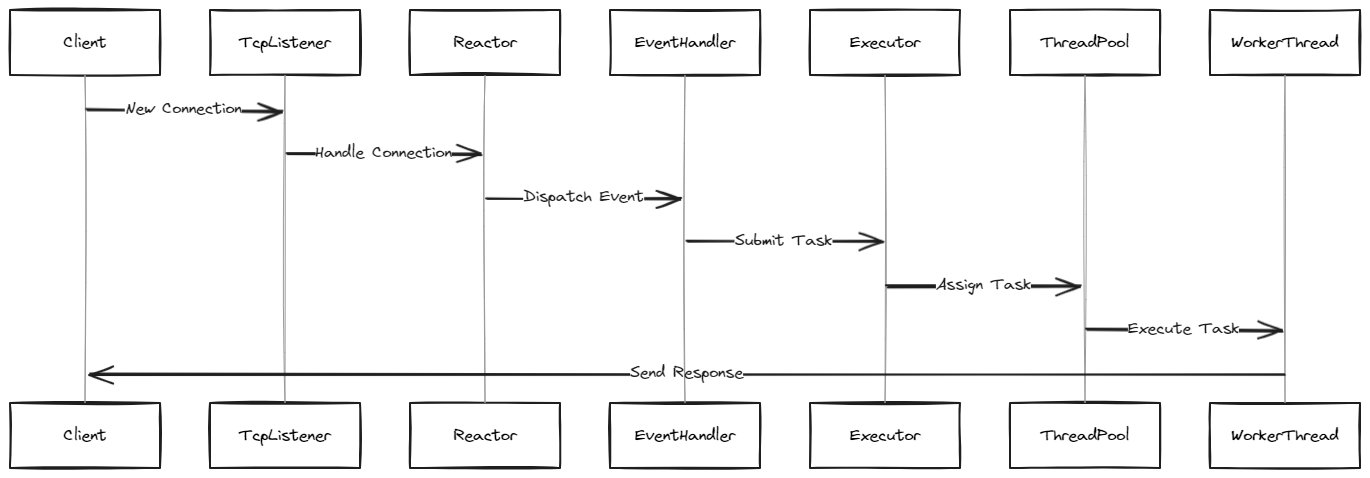

Workflow

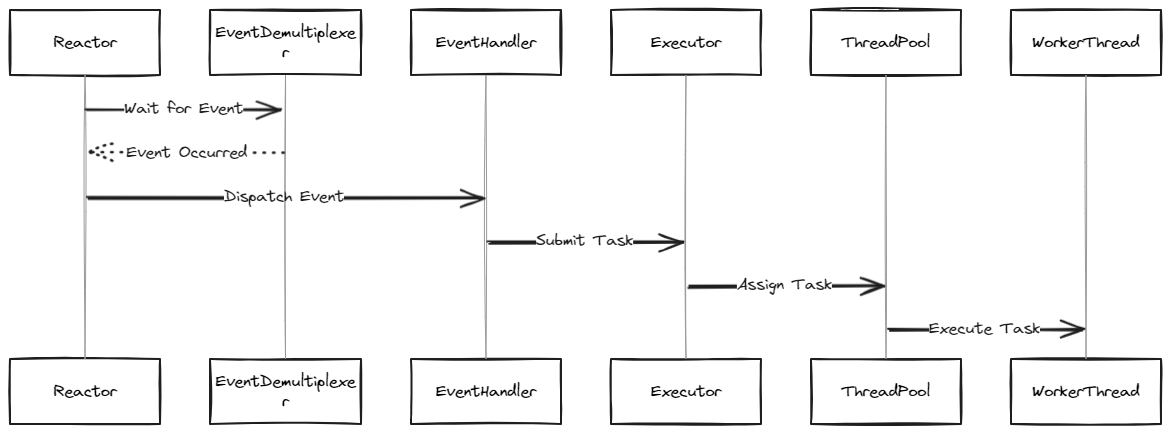

- Event Occurrence: An event occurs on a handle.

- Event Demultiplexing: The Reactor waits for events and, upon occurrence, demultiplexes the event to identify the associated handle.

- Event Dispatching: The Reactor dispatches the event to the corresponding event handler.

- Task Submission: The event handler processes the event and submits the corresponding task to the executor.

- Task Execution: The executor assigns the task to a thread from the thread pool, where it is executed asynchronously.

Detailed Workflow Example

Event Occurrence and Demultiplexing

When an event occurs, such as a new incoming network connection or data being available on an existing connection, it is associated with a specific handle. The synchronous event demultiplexer (e.g., epoll in Linux or kqueue in BSD systems) waits for events on these handles. When an event occurs, it returns the handles that have events ready to be processed.

Event Dispatching

The Reactor, which manages the event handlers and the demultiplexer, identifies the handle associated with the event. It then dispatches this event to the appropriate event handler. Each event handler is responsible for processing events from specific handles.

Task Submission and Execution

Once the event handler processes the event (e.g., reading data from a socket), it may need to perform additional tasks such as processing the data or responding to the client. Instead of performing these tasks directly in the event handler, the handler submits these tasks to the executor.

The executor manages a pool of threads and assigns the submitted tasks to these threads for execution. This allows the Reactor to remain responsive, as the heavy lifting of task execution is offloaded to the executor.

Reactor-Executor Pattern Diagram

Reactor-Executor Pattern in Rust

use std::net::{TcpListener, TcpStream};

use std::io::{Read, Write};

use std::thread;

use threadpool::ThreadPool;

use std::sync::mpsc::{self, Sender, Receiver};

use std::sync::{Arc, Mutex};

use std::collections::HashMap;

fn main() {

let listener = TcpListener::bind("127.0.0.1:9999").unwrap();

let pool = ThreadPool::new(4);

let (event_sender, event_receiver) = mpsc::channel();

let event_receiver = Arc::new(Mutex::new(event_receiver));

println!("Server started...");

thread::spawn(move || {

event_loop(event_receiver, pool);

});

for stream in listener.incoming() {

match stream {

Ok(stream) => {

let sender = event_sender.clone();

sender.send(Event::NewConnection(stream)).unwrap();

}

Err(e) => {

eprintln!("Failed to accept a connection: {}", e);

}

}

}

}

enum Event {

NewConnection(TcpStream),

DataReceived(TcpStream, Vec<u8>),

}

fn event_loop(event_receiver: Arc<Mutex<Receiver<Event>>>, pool: ThreadPool) {

loop {

let event = event_receiver.lock().unwrap().recv().unwrap();

match event {

Event::NewConnection(stream) => {

let pool = pool.clone();

pool.execute(move || handle_connection(stream, pool));

}

Event::DataReceived(mut stream, data) => {

let response = process_data(&data);

stream.write(response.as_bytes()).unwrap();

stream.flush().unwrap();

}

}

}

}

fn handle_connection(mut stream: TcpStream, pool: ThreadPool) {

let mut buffer = [0; 1024];

match stream.read(&mut buffer) {

Ok(bytes_read) => {

let data = buffer[..bytes_read].to_vec();

let sender = pool.clone();

sender.execute(move || {

event_loop::send(Event::DataReceived(stream, data)).unwrap();

});

}

Err(e) => {

eprintln!("Failed to read from connection: {}", e);

}

}

}

fn process_data(data: &Vec<u8>) -> String {

// Simulate data processing

String::from_utf8_lossy(data).to_uppercase()

}

Explanation

- TcpListener: Listens for incoming TCP connections.

- ThreadPool: Manages a pool of threads for handling tasks concurrently.

- Event Channel: Used to send events between threads.

- event_loop: Processes events received from the channel.

- handle_connection: Reads data from the connection, processes it, and sends a response back to the client.

Event Channel

The event channel is a critical component in this architecture. By using an MPSC (multiple-producer, single-consumer) channel, we allow multiple producers (the Reactor handling new connections) to send events to a single consumer (the event loop). This ensures thread-safe communication between different parts of the application.

Arc and Mutex

We use Arc (Atomic Reference Counting) and Mutex to share ownership of the event receiver across multiple threads safely. Arc ensures that the receiver can be accessed concurrently, while Mutex ensures that only one thread can access the receiver at a time, preventing data races.

Detailed Rust Code Explanation

Main Function

- TcpListener::bind: Binds to the specified address and starts listening for incoming TCP connections.

- ThreadPool::new: Creates a thread pool with the specified number of worker threads.

- mpsc::channel: Creates a new MPSC channel for event communication.

- Arc::new and Mutex::new: Wraps the event receiver in

ArcandMutexto allow safe concurrent access. - thread::spawn: Spawns a new thread running the event loop.

Event Loop

- loop: Continuously runs to process incoming events.

- event_receiver.lock(): Locks the receiver to safely receive events.

- match event: Matches the received event type and processes accordingly.

Handle Connection

- stream.read: Reads data from the stream.

- buffer.to_vec(): Converts the read data into a vector.

- pool.execute: Submits a new task to the thread pool for processing the data.

Process Data

- String::from_utf8_lossy: Converts the received bytes into a string, handling invalid UTF-8 sequences gracefully.

- to_uppercase: Simulates data processing by converting the string to uppercase.

Event Loop and Task Submission

Detailed Workflow of Event Handling

Internal Mechanism of Thread Pool

Behind the Scenes

Rust Implementation

In the Rust example, the TcpListener is used to listen for incoming connections. For each accepted connection, a task is submitted to the thread pool using the execute method. The handle_connection function reads data from the stream, processes it, and sends a response. The thread pool manages the execution of tasks, distributing them across multiple threads to handle concurrent connections efficiently.

Performance Considerations

Thread Management

The thread pool ensures efficient management of threads. By reusing a fixed number of threads, it avoids the overhead associated with creating and destroying threads, thus providing better performance and resource utilization.

Lock Contention

Using Arc and Mutex introduces some overhead due to lock contention. However, this is necessary to ensure thread-safe access to shared resources. In performance-critical applications, minimizing the scope and frequency of locks can help reduce this overhead.

Benefits of the Reactor-Executor Pattern

- Scalability: Efficiently handles a large number of concurrent connections.

- Separation of Concerns: Decouples event demultiplexing from task execution, leading to cleaner and more maintainable code.

- Resource Management: Efficiently manages resources such as threads, avoiding the overhead associated with creating and destroying threads.

- Responsiveness: Ensures the application remains responsive even under heavy load by using asynchronous task execution.

Conclusion

The Reactor-Executor pattern is a robust architectural approach for building scalable and responsive concurrent applications. By separating event demultiplexing from task execution, it provides a clean and efficient way to manage multiple concurrent requests. Implementing this pattern in Rust demonstrates its versatility and effectiveness in different programming environments. Understanding and applying the Reactor-Executor pattern can significantly enhance the performance and maintainability of your concurrent applications.